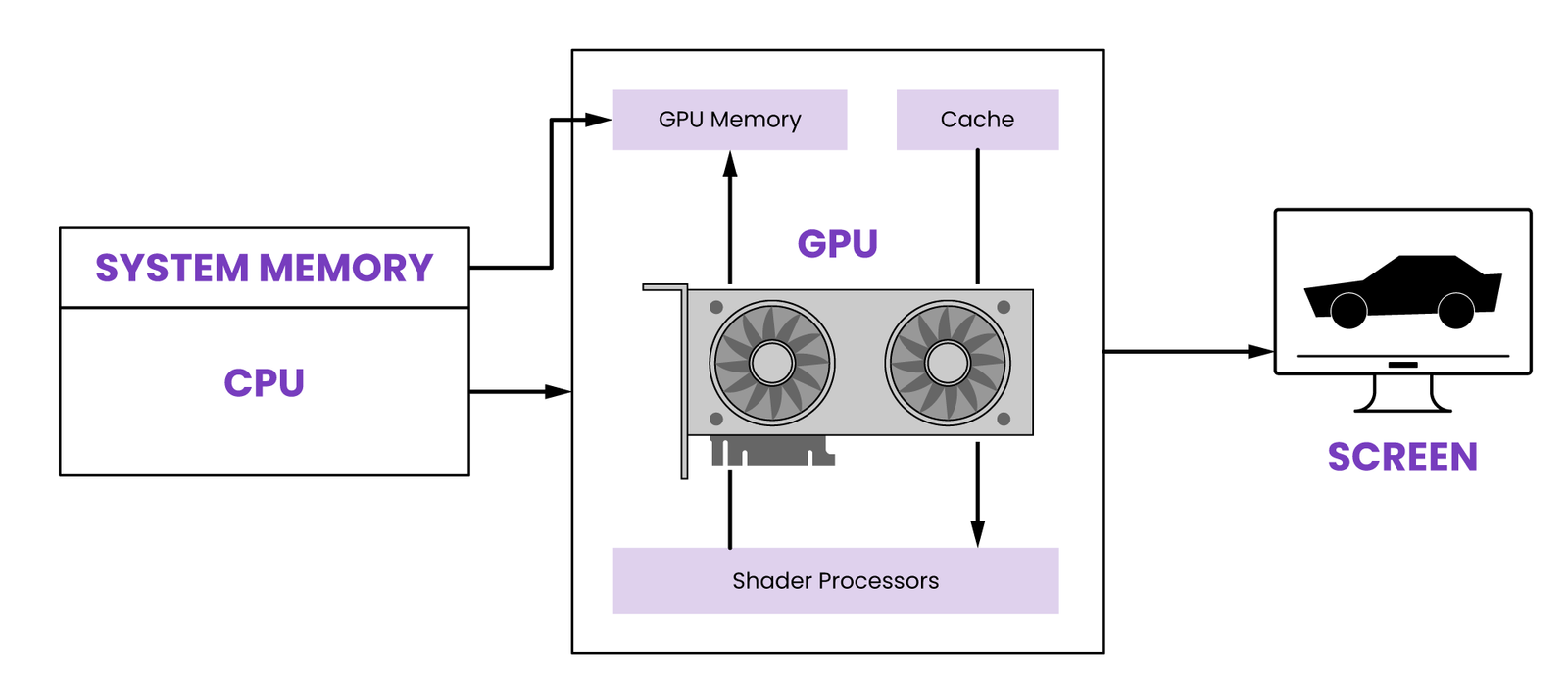

Shared GPU memory refers to the portion of a computer’s system memory that is allocated for use by the GPU. It allows the GPU to access and use system memory as needed for graphics processing.

Shared GPU memory is an important part of modern computing systems. Integrated GPUs have little or no dedicated memory of their own and rely on it entirely, while discrete GPUs fall back on it when their dedicated memory fills up. Because both the CPU and the GPU can reach it, it also gives them a common place to exchange data. In graphics-intensive tasks such as gaming, video editing, and 3D rendering, this extra capacity lets workloads run that would otherwise exceed the GPU’s own memory.

By letting the GPU borrow system memory for graphics processing, shared GPU memory makes better use of installed RAM and keeps demanding applications running when dedicated memory runs short. Understanding how it works, and where its limits lie, is key to getting the most out of the graphics capabilities of modern computing devices.

How Shared GPU Memory Works

Understanding how shared GPU memory works is essential for optimizing system performance. Shared GPU memory is a critical component in modern computing, enabling efficient data processing and graphical rendering. Below, we’ll explore the architecture, concept, and advantages of shared GPU memory.

Overview Of GPU Memory Architecture

On a system with a discrete graphics card, GPU memory consists of dedicated video memory (VRAM) on the card and shared system memory. VRAM is reserved for the GPU and holds graphical data such as textures and frame buffers, while shared memory lets the GPU reach into system RAM when VRAM is not enough. Integrated GPUs, by contrast, typically have no separate VRAM and draw all of their memory from system RAM.

Explanation Of Shared Memory Concept

Shared GPU memory uses system RAM to supplement dedicated VRAM, so the GPU can work with datasets and scenes larger than its own memory. The trade-off is speed: for a discrete GPU, data in shared memory is reached over the PCIe bus, which offers far less bandwidth than VRAM, so drivers try to keep performance-critical data in VRAM and place the overflow in shared memory.
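To make the spillover idea concrete, here is a minimal Python sketch of a placement policy that fills VRAM first and then borrows from system RAM up to a fixed limit. The class name `GpuMemoryModel`, its methods, and the 8 GiB figures are hypothetical; real drivers use more elaborate policies and can also move data between the two pools after allocation.

```python
from dataclasses import dataclass, field


@dataclass
class GpuMemoryModel:
    """Toy model of GPU memory placement (hypothetical, not a real driver API).

    Allocations go to dedicated VRAM while it has room and spill over to
    shared system memory, up to `shared_limit_bytes`, once VRAM is full.
    """

    vram_bytes: int          # capacity of dedicated VRAM
    shared_limit_bytes: int  # how much system RAM the GPU may borrow
    vram_used: int = 0
    shared_used: int = 0
    placements: dict = field(default_factory=dict)  # name -> (pool, size)

    def allocate(self, name: str, size: int) -> str:
        """Place an allocation and return the pool it landed in."""
        if self.vram_used + size <= self.vram_bytes:
            self.vram_used += size
            pool = "vram"
        elif self.shared_used + size <= self.shared_limit_bytes:
            self.shared_used += size
            pool = "shared"
        else:
            raise MemoryError(f"no room for {name!r} ({size} bytes)")
        self.placements[name] = (pool, size)
        return pool

    def free(self, name: str) -> None:
        """Release an allocation from whichever pool holds it."""
        pool, size = self.placements.pop(name)
        if pool == "vram":
            self.vram_used -= size
        else:
            self.shared_used -= size


if __name__ == "__main__":
    GiB = 1024 ** 3
    gpu = GpuMemoryModel(vram_bytes=8 * GiB, shared_limit_bytes=8 * GiB)
    print(gpu.allocate("textures", 6 * GiB))  # vram
    print(gpu.allocate("geometry", 3 * GiB))  # shared (only 2 GiB of VRAM left)
```

This model never moves an allocation after placing it; a driver that migrates data back into VRAM when space frees up would behave differently.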

Benefits Of Shared GPU Memory

- Improved versatility in handling diverse workloads

- Cost-effective memory utilization

- Enhanced support for multitasking

Use Cases Of Shared GPU Memory

Shared GPU memory refers to the system memory (RAM) that is used by the graphics processing unit (GPU) for various tasks. The utilization of shared memory in GPUs has diverse use cases across different applications, including gaming and GPU-accelerated computing. Let’s explore the practical applications of shared GPU memory in detail.

Shared Memory In Gaming

Shared GPU memory acts as a safety net in gaming when dedicated GPU memory is not sufficient. Textures and other rendering data that do not fit in VRAM can be placed in system memory, so a game can keep running on a system with limited dedicated GPU memory instead of running out of memory. Because that memory is much slower for a discrete GPU to reach, heavy reliance on it usually shows up as lower frame rates and stutter, which is why lowering texture quality is often the better fix.
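A back-of-the-envelope calculation shows why relying on shared memory slows a game down. The sketch below assumes, purely for illustration, a discrete GPU with 448 GB/s of VRAM bandwidth, a PCIe 4.0 x16 link of roughly 32 GB/s per direction to system memory, and 2 GB of memory traffic per frame. The function name and these figures are assumptions rather than measurements, and the model ignores caching and overlapping transfers.

```python
def frame_memory_time_ms(traffic_gb: float, shared_fraction: float,
                         vram_gbps: float = 448.0, pcie_gbps: float = 32.0) -> float:
    """Estimate time spent on memory traffic for one frame, in milliseconds.

    `shared_fraction` is the share of traffic served from shared system
    memory over PCIe; the rest comes from VRAM. Bandwidths are in GB/s and
    are illustrative defaults, not figures for any particular card.
    """
    if not 0.0 <= shared_fraction <= 1.0:
        raise ValueError("shared_fraction must be between 0 and 1")
    vram_seconds = traffic_gb * (1.0 - shared_fraction) / vram_gbps
    shared_seconds = traffic_gb * shared_fraction / pcie_gbps
    return (vram_seconds + shared_seconds) * 1000.0


if __name__ == "__main__":
    print(f"{frame_memory_time_ms(2.0, 0.0):.2f} ms")  # 4.46 ms: everything in VRAM
    print(f"{frame_memory_time_ms(2.0, 0.1):.2f} ms")  # 10.27 ms: 10% served from shared memory
```

Under these assumptions, moving just 10% of the traffic to shared memory more than doubles the time, because the PCIe link is 14 times slower than the assumed VRAM bandwidth.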

Shared Memory In GPU-Accelerated Computing

In GPU-accelerated computing, shared memory lets the GPU work with datasets larger than its dedicated memory by accessing system memory when VRAM is insufficient. This is useful for high-performance tasks such as machine learning, scientific simulations, and data processing. Performance then depends heavily on access patterns: data that is reused while it sits in VRAM is cheap to access, while data that must be fetched repeatedly from system memory becomes the bottleneck.
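One common way to let a GPU work with more data than fits in VRAM is on-demand page migration: memory is divided into pages, and a page is moved into VRAM the first time the GPU touches it, evicting an older page if VRAM is full. The toy simulation below assumes least-recently-used (LRU) eviction, which is a simplification; real drivers use their own heuristics. The function name and page counts are illustrative.

```python
from collections import OrderedDict


def simulate_demand_paging(accesses, vram_pages):
    """Count page migrations for a sequence of GPU page accesses.

    Toy model: pages not resident in VRAM are migrated in from system RAM
    on first touch, and the least recently used page is evicted when VRAM
    is full. Returns (migrations_in, evictions).
    """
    if vram_pages < 1:
        raise ValueError("vram_pages must be at least 1")
    resident = OrderedDict()  # page id -> None, least recently used first
    migrations_in = 0
    evictions = 0
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)  # hit: page already in VRAM
            continue
        # Fault: the page has to be migrated from system RAM into VRAM.
        if len(resident) >= vram_pages:
            resident.popitem(last=False)  # evict the least recently used page
            evictions += 1
        resident[page] = None
        migrations_in += 1
    return migrations_in, evictions


if __name__ == "__main__":
    # Working set of 4 pages, room for 4: only the first touch of each page migrates.
    print(simulate_demand_paging([0, 1, 2, 3] * 3, vram_pages=4))     # (4, 0)
    # Working set of 5 pages, room for 4: every single access migrates a page.
    print(simulate_demand_paging([0, 1, 2, 3, 4] * 3, vram_pages=4))  # (15, 11)
```

The second case is thrashing: when a loop’s working set is even slightly larger than VRAM, LRU evicts exactly the page needed next, so every access pays for a migration.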

Challenges And Solutions

Shared GPU memory helps a GPU serve several applications at once by giving it more memory to distribute among them. Used well, it improves resource utilization; used carelessly, it introduces the problems described below.

Potential Issues With Shared GPU Memory

Shared GPU memory can benefit applications and graphics-intensive tasks, but it also presents several challenges. Let’s explore some potential issues that arise with shared GPU memory and the solutions commonly used to overcome them.

1. Fragmentation: Fragmentation occurs when free memory is split into small, scattered pieces, making it difficult to allocate contiguous blocks for larger tasks. This reduces performance and wastes memory. Developers can address it with defragmentation techniques that consolidate scattered blocks into larger contiguous ones, or avoid much of it by allocating from pools of fixed-size blocks.

2. Limited memory capacity: Shared GPU memory is finite, and when multiple applications or tasks use it at the same time, it can run out, leading to performance degradation or even application crashes. One solution is memory pooling: reserving a fixed amount of memory up front and sub-allocating it among tasks, which reuses freed blocks efficiently and prevents oversubscription. Developers can also prioritize allocations for critical tasks and release unused memory promptly.

3. Data synchronization conflicts: When multiple tasks access the same memory concurrently, simultaneous reads and writes can corrupt data or produce inconsistent results. Synchronization mechanisms such as locks, semaphores, or atomic operations ensure that tasks access shared memory in a coordinated, controlled manner, preventing these inconsistencies.

Optimization Techniques For Efficient Utilization Of Shared Memory
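Memory pooling and locking, the remedies proposed above for capacity limits and synchronization conflicts, are also the starting point for efficient memory use. Below is a minimal Python sketch of a fixed-size block pool guarded by a lock. A `bytearray` stands in for one large up-front GPU allocation, and the class and method names are hypothetical. Because every block has the same size and freed blocks are reused, repeated allocations do not scatter free space into unusable gaps, and the pool can never hand out more memory than it reserved.

```python
import threading


class FixedBlockPool:
    """Hypothetical pool of equal-sized blocks carved from one reservation."""

    def __init__(self, block_size: int, num_blocks: int):
        self.block_size = block_size
        # Stands in for a single large GPU allocation made up front.
        self.buffer = bytearray(block_size * num_blocks)
        # Stack of free block indices; pop() hands out block 0 first.
        self._free = list(range(num_blocks - 1, -1, -1))
        self._lock = threading.Lock()  # serializes access from multiple threads

    def allocate(self) -> int:
        """Take a free block index, or fail instead of oversubscribing."""
        with self._lock:
            if not self._free:
                raise MemoryError("pool exhausted")
            return self._free.pop()

    def release(self, block: int) -> None:
        """Return a block so later allocations can reuse it."""
        with self._lock:
            self._free.append(block)

    def view(self, block: int) -> memoryview:
        """Writable view of one block inside the shared buffer."""
        start = block * self.block_size
        return memoryview(self.buffer)[start:start + self.block_size]

    @property
    def free_blocks(self) -> int:
        with self._lock:
            return len(self._free)


if __name__ == "__main__":
    pool = FixedBlockPool(block_size=1024, num_blocks=8)

    def worker():
        for _ in range(1000):
            block = pool.allocate()
            pool.view(block)[0] = 1  # each thread writes only to the block it owns
            pool.release(block)

    threads = [threading.Thread(target=worker) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(pool.free_blocks)  # 8
```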

Efficient utilization of shared GPU memory is crucial for maximizing performance and ensuring smooth execution of graphical applications. The following techniques can help:

1. Data compression: Compressing data before storing it reduces its memory footprint and frees up space. General-purpose algorithms such as zlib or LZ4 can be used, though compressing and decompressing costs processing time, so the space savings must be weighed against that overhead.

2. Data locality: Organizing data so that items accessed together are stored contiguously reduces the number of separate memory accesses and improves cache hit rates, lowering effective memory latency.

3. Memory prefetching: Prefetching predicts which data will be needed soon and moves it into faster memory ahead of time, hiding the latency of fetching it on demand.

4. Constant memory optimization: In GPU programming models such as CUDA, constant memory is a small, read-only memory space (separate from shared memory) for data such as constants, lookup tables, or configuration parameters. It is cached and is fastest when many threads read the same value at once, so placing suitable data there can reduce memory bandwidth demands and improve performance.

In conclusion, understanding the challenges associated with shared GPU memory and applying the right solutions and optimization techniques is essential for good performance in graphics-intensive applications. By addressing these issues and using shared memory efficiently, developers can get the most out of GPU computing and deliver high-quality user experiences.
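The data-compression technique can be tried with Python’s standard `zlib` module. This sketch compresses on the CPU and only measures the footprint saving; it does not model GPU-side decompression, and the helper name `compressed_footprint` is illustrative. It also shows the main caveat: compression only pays off for data with redundancy, so a repetitive lookup table shrinks dramatically while random bytes do not get meaningfully smaller.

```python
import os
import zlib


def compressed_footprint(data: bytes, level: int = 6) -> tuple[int, int]:
    """Return (original_size, compressed_size) for `data` compressed with zlib."""
    packed = zlib.compress(data, level)
    # zlib is lossless, so the round trip must reproduce the input exactly.
    if zlib.decompress(packed) != data:
        raise RuntimeError("round trip failed")
    return len(data), len(packed)


if __name__ == "__main__":
    lookup_table = bytes(range(256)) * 1024  # 256 KiB with lots of repetition
    noise = os.urandom(256 * 1024)           # 256 KiB of incompressible data
    for label, buf in (("lookup table", lookup_table), ("random bytes", noise)):
        original, packed = compressed_footprint(buf)
        print(f"{label}: {original} -> {packed} bytes ({packed / original:.1%})")
```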

Future Trends And Advancements

As technology evolves, shared GPU memory is becoming more capable and more important. New hardware and software techniques are expanding what it can do across many industries, and its role in artificial intelligence (AI) and machine learning is growing as models and datasets get larger. Let’s look at these advancements and the potential they hold.

Emerging Technologies In Shared GPU Memory

- Rapid advancements in parallel processing: As GPUs gain more parallel compute, faster links between the CPU, system memory, and GPU (each new PCIe generation, for example, doubles the available bandwidth) make shared memory a more practical extension of VRAM.

- Hybrid memory architectures: Unified memory designs, in which the CPU and GPU draw from a single pool of memory (as in Apple’s M-series chips and AMD’s APUs), remove the need to copy data between separate memories and improve overall system efficiency.

- Virtualized shared memory: GPU virtualization lets multiple users or virtual machines share one GPU’s memory, each within its own allocation, increasing flexibility and utilization.

- Advanced memory management techniques: Techniques such as on-demand page migration, which moves memory pages between system RAM and VRAM as they are accessed, and memory compression further improve the efficiency of shared GPU memory.
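The virtualization idea can be illustrated with a simple quota scheme: one physical memory budget is divided among tenants, and each tenant’s requests are checked against its own share. This is a hypothetical sketch (the class, method, and tenant names are made up); actual GPU virtualization products enforce partitions in the driver or hardware, with their own rules.

```python
class PartitionedGpuMemory:
    """Hypothetical per-tenant quotas carved out of one physical memory budget."""

    def __init__(self, total_bytes: int):
        self.total_bytes = total_bytes
        self.quotas: dict[str, int] = {}  # tenant -> maximum bytes
        self.used: dict[str, int] = {}    # tenant -> bytes currently allocated

    def add_tenant(self, name: str, quota: int) -> None:
        """Reserve a share of physical memory for a tenant (e.g. a virtual machine)."""
        if name in self.quotas:
            raise ValueError(f"tenant {name!r} already exists")
        if sum(self.quotas.values()) + quota > self.total_bytes:
            raise ValueError("quotas would exceed physical memory")
        self.quotas[name] = quota
        self.used[name] = 0

    def allocate(self, name: str, size: int) -> bool:
        """Grant a request only if it fits inside the tenant's own quota."""
        if self.used[name] + size > self.quotas[name]:
            return False
        self.used[name] += size
        return True

    def release(self, name: str, size: int) -> None:
        """Give memory back to the tenant's quota."""
        self.used[name] = max(0, self.used[name] - size)


if __name__ == "__main__":
    GiB = 1024 ** 3
    gpu = PartitionedGpuMemory(total_bytes=24 * GiB)
    gpu.add_tenant("vm-a", 16 * GiB)
    gpu.add_tenant("vm-b", 8 * GiB)
    print(gpu.allocate("vm-b", 10 * GiB))  # False: exceeds vm-b's quota
    print(gpu.allocate("vm-a", 10 * GiB))  # True
```

A tenant that asks for more than its share is refused rather than allowed to crowd out the others, which is the isolation property virtualization aims for.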

Increasing Role Of Shared Memory In AI And Machine Learning

In the rapidly advancing fields of AI and machine learning, shared GPU memory plays a crucial role in pushing the boundaries of what’s possible. Here’s how:

- Working with larger datasets and models: Shared GPU memory lets training and inference jobs handle data and models that exceed dedicated VRAM. This trades speed for capacity, since data held in system memory is slower to reach, but it can make a job possible on hardware that could not otherwise run it.

- Enhanced model complexity: By enlarging the memory available to the GPU, shared memory allows larger, more complex models to be loaded, even though it does not add any computational power.

- Real-time decision-making: On systems where the CPU and GPU share memory, data can be handed to the GPU without an explicit copy, which helps reduce latency for AI systems that must respond quickly to changing input.

- Optimized resource utilization: The ability to share GPU memory among multiple AI and machine learning tasks ensures efficient resource allocation, maximizing the utilization of hardware resources.
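Whether a model needs shared memory at all comes down to simple arithmetic. The sketch below estimates the memory taken by a model’s weights alone (parameter count times bytes per parameter) and how much of that would not fit in VRAM. The 7-billion-parameter model, 16-bit weights, 12 GiB card, and function names are illustrative assumptions; training also needs memory for gradients, optimizer state, and activations, so real requirements are considerably higher.

```python
GIB = 1024 ** 3


def weights_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / GIB


def overflow_gib(num_params: float, bytes_per_param: int, vram_gib: float) -> float:
    """Portion of the weights that would have to live outside dedicated VRAM."""
    return max(0.0, weights_gib(num_params, bytes_per_param) - vram_gib)


if __name__ == "__main__":
    # Hypothetical example: 7 billion parameters stored as 16-bit (2-byte) values.
    print(f"{weights_gib(7e9, 2):.1f} GiB of weights")                        # 13.0 GiB
    print(f"{overflow_gib(7e9, 2, vram_gib=12):.1f} GiB over a 12 GiB card")  # 1.0 GiB
```

In this example, roughly 1 GiB of weights would have to be served from shared memory, or the model would not load at all without it.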

Frequently Asked Questions About Shared GPU Memory

What Is Shared GPU Memory?

Shared GPU memory is the portion of a computer’s system memory (RAM) that the graphics processing unit (GPU) is allowed to use. Unlike dedicated VRAM, it is not set aside exclusively for the GPU: both the GPU and the CPU can access it, and any part the GPU is not using remains available to the rest of the system.

How Does Shared GPU Memory Work?

Shared GPU memory works by letting the GPU use part of the computer’s RAM to store and access data it needs for rendering graphics and performing other computations. Rather than being reserved in advance, it is allocated dynamically as the GPU needs it, up to a limit set by the operating system and driver; on Windows, Task Manager typically shows this limit as half of the installed RAM.

What Are The Benefits Of Shared GPU Memory?

Shared GPU memory offers several benefits. It gives the GPU extra capacity, so graphics-intensive applications can keep running when dedicated memory is full. On systems where the CPU and GPU share memory, it also lets them exchange data without copying it between separate memories, which can improve overall system performance.

Additionally, shared GPU memory enables smoother multitasking and better resource management.

How Is Shared GPU Memory Different From Dedicated GPU Memory?

Shared GPU memory is a portion of the computer’s main memory that is allocated for use by the GPU. It is shared and used by both the GPU and CPU. In contrast, dedicated GPU memory (VRAM) is a separate memory, usually on the graphics card itself, that is used exclusively by the GPU.

Dedicated GPU memory is much faster for the GPU to access, with far higher bandwidth, while shared GPU memory adds capacity and makes flexible use of the system RAM that is already installed.

Conclusion

Shared GPU memory is a significant aspect of graphics processing that makes memory allocation more flexible. By letting the GPU draw on system RAM, it allows applications to use more memory than the GPU’s dedicated VRAM provides and makes better use of the memory installed in the system.

On systems where the CPU and GPU share memory, it also lets them exchange data without extra copies. Understanding shared GPU memory, including its speed trade-offs compared with dedicated VRAM, is important for getting the most out of GPU technology in fields such as gaming, AI, and scientific simulations.