To set up a GPU for deep learning, install the GPU driver and supporting libraries such as CUDA and cuDNN, then configure your deep learning framework to use the GPU for training and inference. Deep learning models are computationally demanding, and a correctly configured GPU can substantially shorten both training and inference time.

With the increasing popularity of deep learning in various industries, harnessing the computational power of GPUs has become crucial for achieving optimal performance. This article provides a step-by-step guide on how to set up a GPU for deep learning, including the installation of compatible drivers and libraries, configuring the deep learning framework, and optimizing the GPU settings for maximum performance.

By following these instructions, you can effectively leverage the capabilities of GPUs to accelerate your deep learning workflows and achieve better results.

Credit: towardsdatascience.com

1. Why Use GPUs For Deep Learning

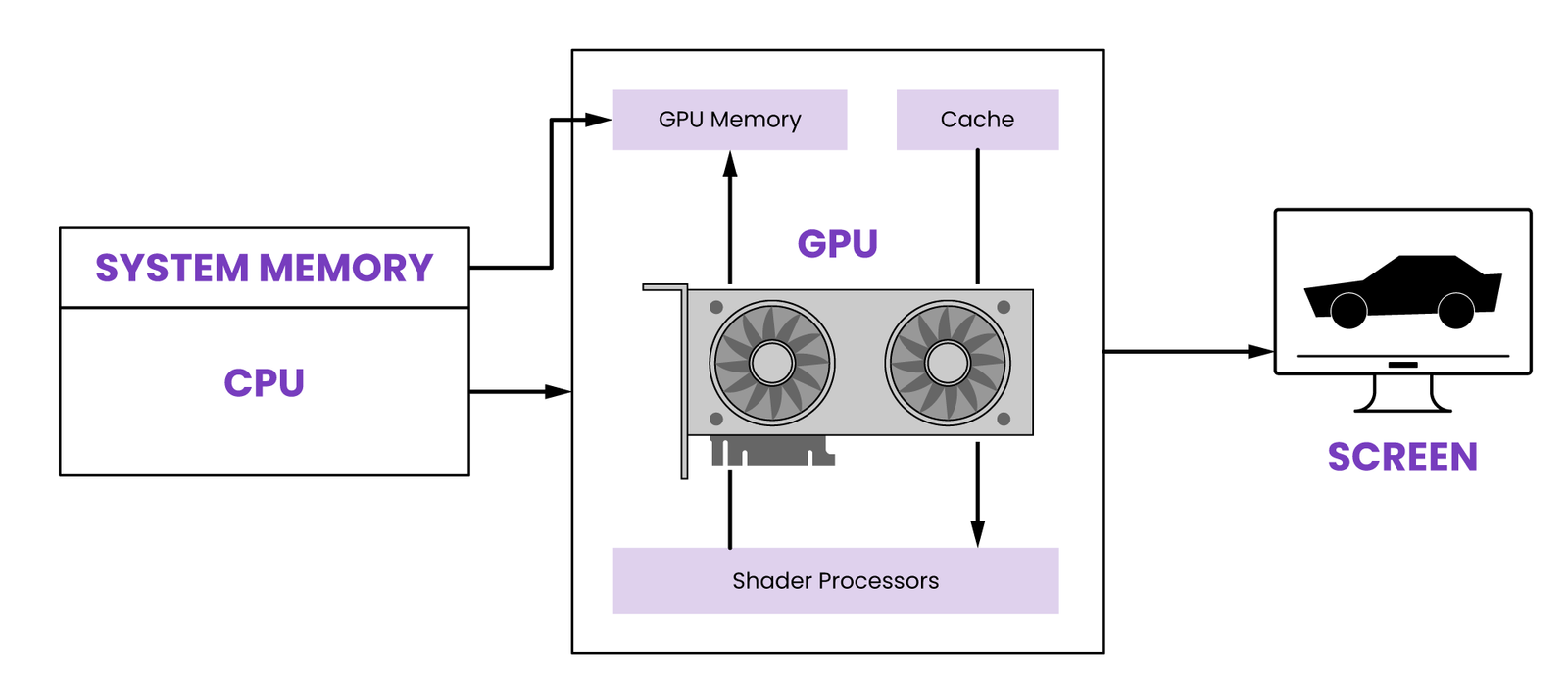

When it comes to deep learning, GPUs (graphics processing units) offer significant advantages over traditional CPUs. The use of GPUs for deep learning has become increasingly popular due to their ability to accelerate the training and inference processes, handle large datasets, and leverage parallel processing power.

1.1 Faster Training And Inference

GPU acceleration allows for faster training and inference by handling complex mathematical computations in parallel, significantly reducing the time taken to process data and train deep learning models.

1.2 Ability To Handle Large Datasets

GPUs pair dedicated on-board memory with high memory bandwidth, so they can move large batches of data through a model quickly. The full dataset does not need to fit in GPU memory, because it is normally fed to the GPU one batch at a time.

1.3 Parallel Processing Power

A GPU contains thousands of small cores that apply the same operation to many data elements at once. Deep learning workloads are dominated by matrix and vector arithmetic, which maps naturally onto this kind of parallelism.

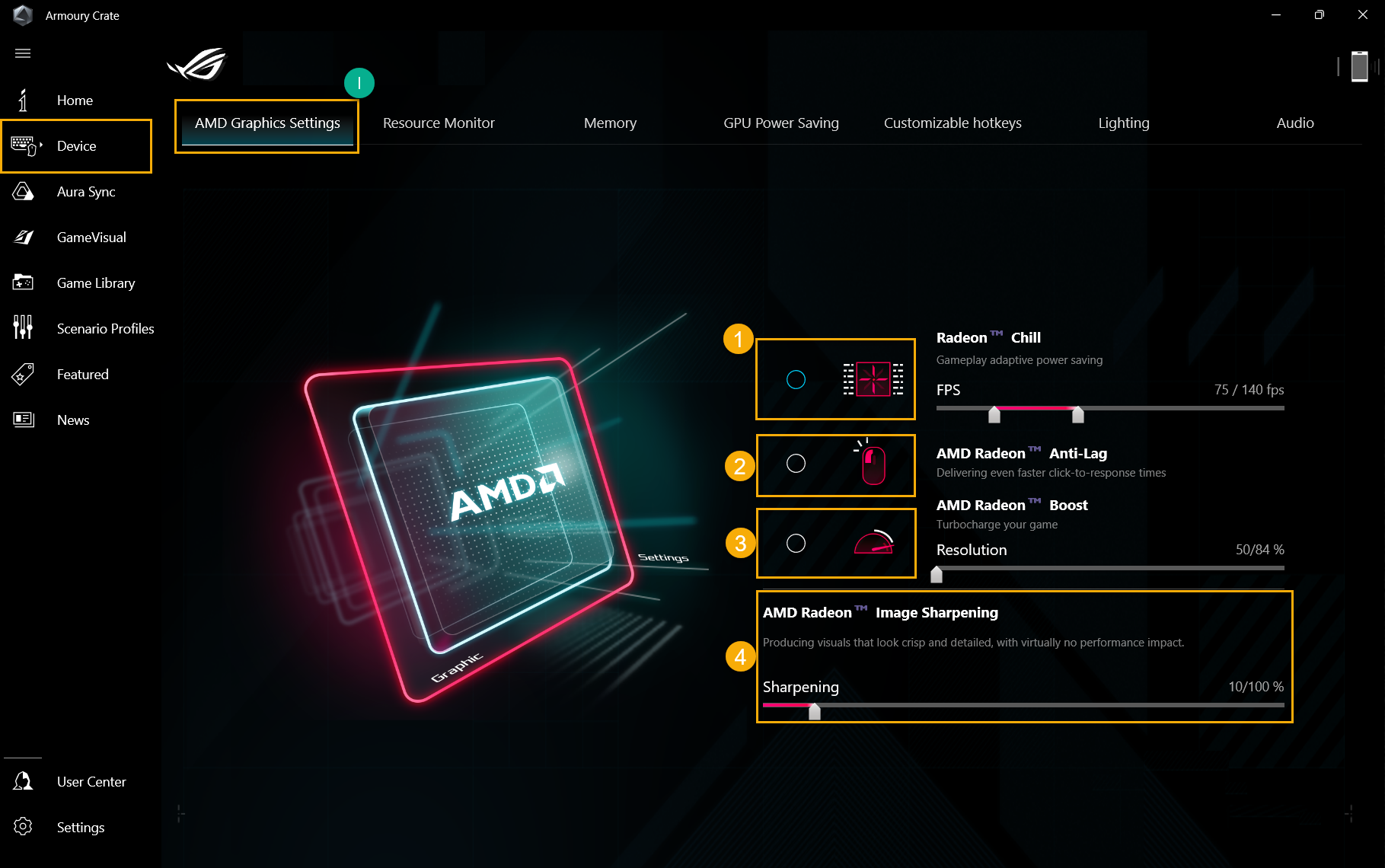

Credit: rog.asus.com

2. Choosing The Right GPU

When setting up a GPU for deep learning, selecting the right GPU is crucial for optimal performance and efficient processing. In this section, we will explore the considerations for choosing a GPU and popular GPU options for deep learning.

2.1 Considerations For Selecting A GPU

When considering a GPU for deep learning, there are several key factors to keep in mind:

- GPU Memory: Ensure the GPU has enough memory to hold the model, its intermediate activations, and at least one batch of training data.

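- Compute Capability: For NVIDIA GPUs, compute capability is a version number that identifies which hardware features the GPU supports. CUDA releases and deep learning frameworks require a minimum compute capability, so check it against the software you plan to run.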

- Compatibility: Consider the compatibility of the GPU with the deep learning frameworks and libraries you intend to use.

- Power Consumption: Evaluate the power consumption of the GPU to ensure it aligns with your system and energy requirements.

- Price-Performance Ratio: Assess the cost-effectiveness of the GPU in relation to its performance for deep learning tasks.
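To make the GPU memory consideration more concrete, here is a rough back-of-the-envelope sketch. It assumes FP32 training with the Adam optimizer, which keeps weights, gradients, and two optimizer buffers (about 16 bytes per parameter), and it deliberately ignores activations and framework overhead, which can be substantial. The function name and the 350-million-parameter example are illustrative, not taken from any particular model.

```python
def estimate_training_memory_gb(num_params: int, bytes_per_value: int = 4) -> float:
    """Rough lower bound on GPU memory needed to train with Adam.

    Assumption: weights, gradients, and two Adam moment buffers are all
    stored at the same precision. Activations are NOT included.
    """
    copies = 4  # weights, gradients, Adam first moment, Adam second moment
    total_bytes = num_params * bytes_per_value * copies
    return total_bytes / 1024**3


if __name__ == "__main__":
    # Hypothetical 350-million-parameter model.
    needed = estimate_training_memory_gb(350_000_000)
    print(f"At least {needed:.1f} GB before activations")
```

Treat the result as a floor rather than a sizing guarantee: batch size and model architecture determine how much extra memory activations need.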

2.2 Popular GPU Options For Deep Learning

When it comes to popular GPU options for deep learning, several models stand out for their performance and compatibility with deep learning frameworks:

| GPU Model | Memory | CUDA Compute Capability |
|---|---|---|
| NVIDIA RTX 2080 Ti | 11 GB GDDR6 | 7.5 |
| NVIDIA GTX 1080 Ti | 11 GB GDDR5X | 6.1 |
| AMD Radeon VII | 16 GB HBM2 | N/A (compute capability applies only to NVIDIA GPUs) |

These GPUs are widely favored for deep learning tasks due to their high memory capacity and strong compute capabilities, making them suitable for training complex neural networks.

3. Setting Up Your GPU For Deep Learning

Setting up your GPU correctly is crucial for efficient deep learning and ensuring smooth processing of complex algorithms. In this section, we will guide you through the steps required to optimize your GPU for deep learning operations. Follow these steps carefully to make the most of your GPU’s capabilities.

3.1 Installing GPU Drivers

To begin, you need to install the appropriate GPU drivers for your system. These drivers enable your operating system to communicate effectively with your GPU. Here’s how you can install the GPU drivers:

- Identify the make and model of your GPU. You can usually find this information in your computer’s documentation or by checking the manufacturer’s website.

- Visit the official website of the GPU manufacturer and navigate to the “Drivers” or “Downloads” section.

- Search for the appropriate driver for your GPU model and operating system.

- Download the driver and follow the installation instructions provided by the manufacturer.

- Restart your computer to complete the installation process.
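After the restart, it can help to confirm that the driver is actually loaded. The sketch below assumes an NVIDIA GPU and relies on the `nvidia-smi` command-line tool that ships with the NVIDIA driver. The function name `query_nvidia_gpus` is illustrative. On a machine without the tool it simply returns an empty list.

```python
import shutil
import subprocess


def query_nvidia_gpus() -> list[dict]:
    """Return name, driver version, and total memory for each NVIDIA GPU.

    An empty list usually means the driver is not installed, failed to
    load, or the machine has no NVIDIA GPU.
    """
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            [
                "nvidia-smi",
                "--query-gpu=name,driver_version,memory.total",
                "--format=csv,noheader",
            ],
            capture_output=True,
            text=True,
            timeout=30,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        return []

    gpus = []
    for line in result.stdout.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != 3:
            continue  # skip lines that do not match the expected CSV shape
        name, driver, memory = parts
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus


if __name__ == "__main__":
    found = query_nvidia_gpus()
    print(found if found else "No NVIDIA GPU detected via nvidia-smi")
```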

3.2 Installing CUDA Toolkit

The CUDA Toolkit is a software development kit provided by NVIDIA that includes libraries, tools, and frameworks specifically designed for GPU-accelerated computing. Follow these steps to install the CUDA Toolkit:

- Visit the NVIDIA Developer website and navigate to the CUDA Toolkit download page.

- Choose the appropriate version of the CUDA Toolkit for your operating system.

- Download the installer and run it.

- Follow the on-screen instructions to complete the installation process.

- Restart your computer to apply any changes.
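One way to confirm the toolkit installation is to ask the CUDA compiler for its version. The sketch below assumes `nvcc` is on your `PATH` and that its output contains a `release X.Y` fragment, which is how `nvcc --version` normally reports the toolkit release. The helper names are illustrative.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Extract the release number (for example '12.1') from nvcc output."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release() -> Optional[str]:
    """Return the CUDA Toolkit release found on PATH, or None if nvcc is absent."""
    if shutil.which("nvcc") is None:
        return None
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, timeout=30
        )
    except (subprocess.SubprocessError, OSError):
        return None
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    print(f"CUDA Toolkit release: {release}" if release else "nvcc not found on PATH")
```

If the function returns `None` even though the installer finished, the toolkit's `bin` directory may simply be missing from `PATH`.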

3.3 Configuring Deep Learning Libraries

Once you have installed the necessary drivers and tools, it’s time to configure the deep learning libraries to utilize your GPU effectively. The popular deep learning libraries, such as TensorFlow and PyTorch, provide GPU support out of the box. Follow the library-specific documentation to configure the libraries to use your GPU:

- TensorFlow: Refer to the official TensorFlow documentation for instructions on configuring GPU support.

- PyTorch: Visit the PyTorch website and follow the installation guide to enable GPU support.
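Once a framework is installed, a quick check like the following can confirm whether it sees the GPU. This is a minimal sketch: it uses `torch.cuda.is_available()` for PyTorch and `tf.config.list_physical_devices("GPU")` for TensorFlow, and it reports `None` for any framework that is not installed. The function name `framework_gpu_status` is illustrative.

```python
import importlib.util


def framework_gpu_status() -> dict:
    """Report whether PyTorch and TensorFlow can see a GPU.

    Each value is True or False when the framework imported successfully,
    or None when it is not installed or failed to import.
    """
    status = {"pytorch": None, "tensorflow": None}

    if importlib.util.find_spec("torch") is not None:
        try:
            import torch

            status["pytorch"] = bool(torch.cuda.is_available())
        except Exception:
            status["pytorch"] = None

    if importlib.util.find_spec("tensorflow") is not None:
        try:
            import tensorflow as tf

            status["tensorflow"] = len(tf.config.list_physical_devices("GPU")) > 0
        except Exception:
            status["tensorflow"] = None

    return status


if __name__ == "__main__":
    for name, visible in framework_gpu_status().items():
        print(f"{name}: {'not installed' if visible is None else visible}")
```

A `False` result with a working driver often points to a CPU-only build of the framework or a CUDA version mismatch.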

3.4 Troubleshooting Common Issues

It’s not uncommon to encounter issues while setting up your GPU for deep learning. Here are some common problems and their possible solutions:

| Issue | Possible Solution |
|---|---|
| GPU not recognized | Ensure that the GPU is properly connected and seated in the PCIe slot. Check if the GPU drivers are installed correctly. |
| Code execution errors | Verify that the deep learning libraries are properly configured to use the GPU. Check for any compatibility issues between the libraries and your GPU drivers. |
| Overheating or performance issues | Clean the GPU’s cooling system and ensure proper ventilation. Configure appropriate power settings to prevent overheating. Consider upgrading to a more powerful GPU if necessary. |
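For the overheating and performance row, it can be useful to read temperature, utilization, and power draw while a training job runs. The sketch below assumes an NVIDIA GPU and queries `nvidia-smi` for the `temperature.gpu`, `utilization.gpu`, and `power.draw` fields. The helper names are illustrative, and fields the GPU does not report come back as `None`.

```python
import shutil
import subprocess
from typing import Optional

QUERY_FIELDS = "temperature.gpu,utilization.gpu,power.draw"


def _to_float(text: str) -> Optional[float]:
    """Convert a CSV field to float, or None for values like '[N/A]'."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_health_line(line: str) -> dict:
    """Parse one 'temperature, utilization, power' CSV line."""
    parts = [part.strip() for part in line.split(",")]
    parts += [""] * (3 - len(parts))  # pad short lines so unpacking is safe
    temp, util, power = (_to_float(part) for part in parts[:3])
    return {"temperature_c": temp, "utilization_pct": util, "power_w": power}


def read_gpu_health() -> list[dict]:
    """Return one health record per GPU, or an empty list without nvidia-smi."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
            capture_output=True,
            text=True,
            timeout=30,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        return []
    return [parse_health_line(line) for line in result.stdout.strip().splitlines()]


if __name__ == "__main__":
    print(read_gpu_health())
```

Safe temperature limits vary by GPU model, so compare the readings against the manufacturer's specification rather than a fixed number.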

By following these steps and troubleshooting common issues, you can optimize your GPU for deep learning and unlock its full potential in accelerating your training and inference processes.

4. Optimizing Deep Learning Workflow With GPUs

When it comes to optimizing the deep learning workflow, GPUs play a crucial role. GPUs, or Graphics Processing Units, are highly parallel processors that can perform multiple calculations simultaneously. This parallel processing power makes GPUs ideal for training and running deep learning models, as they can handle the complex computations involved more efficiently than traditional CPUs.

4.1 Data Preprocessing And Augmentation

Data preprocessing and augmentation are essential steps in deep learning, as they help improve model performance and generalization. Before training a deep learning model, it is crucial to ensure that the data is properly prepared and transformed. This involves tasks such as normalizing the data, handling missing values, and converting categorical variables into numerical representations.

Data augmentation techniques, such as image rotation, flipping, and cropping, can also be applied to increase the diversity of the training data and reduce the risk of overfitting. GPUs can accelerate these operations when the preprocessing pipeline runs on the device, although many pipelines run augmentation on the CPU in parallel with GPU training so that the GPU is never left waiting for data.
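The flipping and cropping operations mentioned above can be sketched in a few lines. This is a dependency-free illustration that treats an image as a list of pixel rows. Real projects would normally use the augmentation utilities of their framework, and the function names here are illustrative.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2D image (a list of rows)."""
    return [row[::-1] for row in image]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    height, width = len(image), len(image[0])
    if crop_h > height or crop_w > width:
        raise ValueError("crop size exceeds image size")
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, crop_h, crop_w, rng, flip_prob=0.5):
    """Randomly flip the image, then take a random crop."""
    if rng.random() < flip_prob:
        image = horizontal_flip(image)
    return random_crop(image, crop_h, crop_w, rng)


if __name__ == "__main__":
    picture = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(augment(picture, 2, 2, random.Random(0)))
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which helps when debugging a training run.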

4.2 Model Selection And Architecture

Choosing the right deep learning model architecture is key to achieving optimal performance. Model selection involves deciding which type of deep learning model, such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs), is most suitable for the specific task at hand. GPUs enable researchers and practitioners to experiment with and train various models efficiently, speeding up the model selection process.

Additionally, GPUs can support the training of more complex and deeper networks, as they can handle the increased computational demands of these models. This flexibility allows deep learning practitioners to explore different architectures and make informed decisions based on performance metrics, such as accuracy and convergence rate.

4.3 Hyperparameter Tuning

Hyperparameter tuning is the process of finding the optimal values for the parameters that are not learned during the training process. These parameters include learning rate, batch size, regularization strength, and activation functions. Tuning these hyperparameters can significantly impact the performance of the deep learning model.

Using a GPU for hyperparameter tuning can speed up the process by allowing researchers to run multiple training iterations in parallel. GPUs enable faster convergence and evaluation of different parameter configurations, enabling practitioners to find the best set of hyperparameters more efficiently.
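As a small illustration of the search itself, the sketch below runs a grid search over learning rate and batch size. The objective `toy_validation_loss` is a made-up stand-in for "train a model and return its validation loss", so the numbers carry no real meaning. In practice each call would launch a training run, possibly on a separate GPU.

```python
import itertools


def toy_validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical objective with its minimum at lr=0.01, batch_size=64."""
    return (learning_rate - 0.01) ** 2 * 1e4 + ((batch_size - 64) / 64) ** 2


def grid_search(objective, grid: dict):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    best_config, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = objective(**config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


if __name__ == "__main__":
    search_space = {
        "learning_rate": [0.1, 0.01, 0.001],
        "batch_size": [32, 64, 128],
    }
    print(grid_search(toy_validation_loss, search_space))
```

Grid search is the simplest strategy; the number of runs grows multiplicatively with each added hyperparameter, which is why parallel GPU runs are attractive.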

4.4 Monitoring And Visualization

Monitoring and visualization are essential for ensuring that the deep learning model is training effectively and producing desired results. Monitoring involves tracking various performance metrics, such as loss and accuracy, during the training process. Visualization techniques, such as plotting training curves and examining the learned representations, can provide valuable insights into the model’s behavior.

Because GPU training completes each epoch sooner, metrics arrive faster and training progress can be tracked in near real time. Deep learning practitioners can quickly identify potential issues, such as overfitting or underfitting, and make necessary adjustments to improve model performance.
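One simple way to spot overfitting from tracked metrics is to look for epochs where training loss keeps falling while validation loss rises. The sketch below implements that heuristic. The function name and the default `patience` of three epochs are illustrative choices, not a standard.

```python
def detect_overfitting(train_losses, val_losses, patience=3):
    """Return the first epoch of a run of `patience` consecutive epochs in
    which training loss fell while validation loss rose, or None."""
    if len(train_losses) != len(val_losses):
        raise ValueError("loss histories must have the same length")
    streak = 0
    for epoch in range(1, len(train_losses)):
        train_improving = train_losses[epoch] < train_losses[epoch - 1]
        val_worsening = val_losses[epoch] > val_losses[epoch - 1]
        streak = streak + 1 if (train_improving and val_worsening) else 0
        if streak >= patience:
            return epoch - patience + 1
    return None


if __name__ == "__main__":
    train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
    val = [1.1, 0.9, 0.8, 0.85, 0.9, 0.95, 1.0]
    print(detect_overfitting(train, val))
```

In this made-up history the validation loss turns upward at epoch index 3, which is where the function reports the divergence.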

5. Advanced Techniques For GPU-Based Deep Learning

When it comes to GPU-based deep learning, there are several advanced techniques that can enhance the overall performance and efficiency. These techniques allow you to make the most out of your GPU resources, maximize training speeds, and achieve better results. In this section, we will explore 5 advanced techniques that can take your deep learning models to the next level.

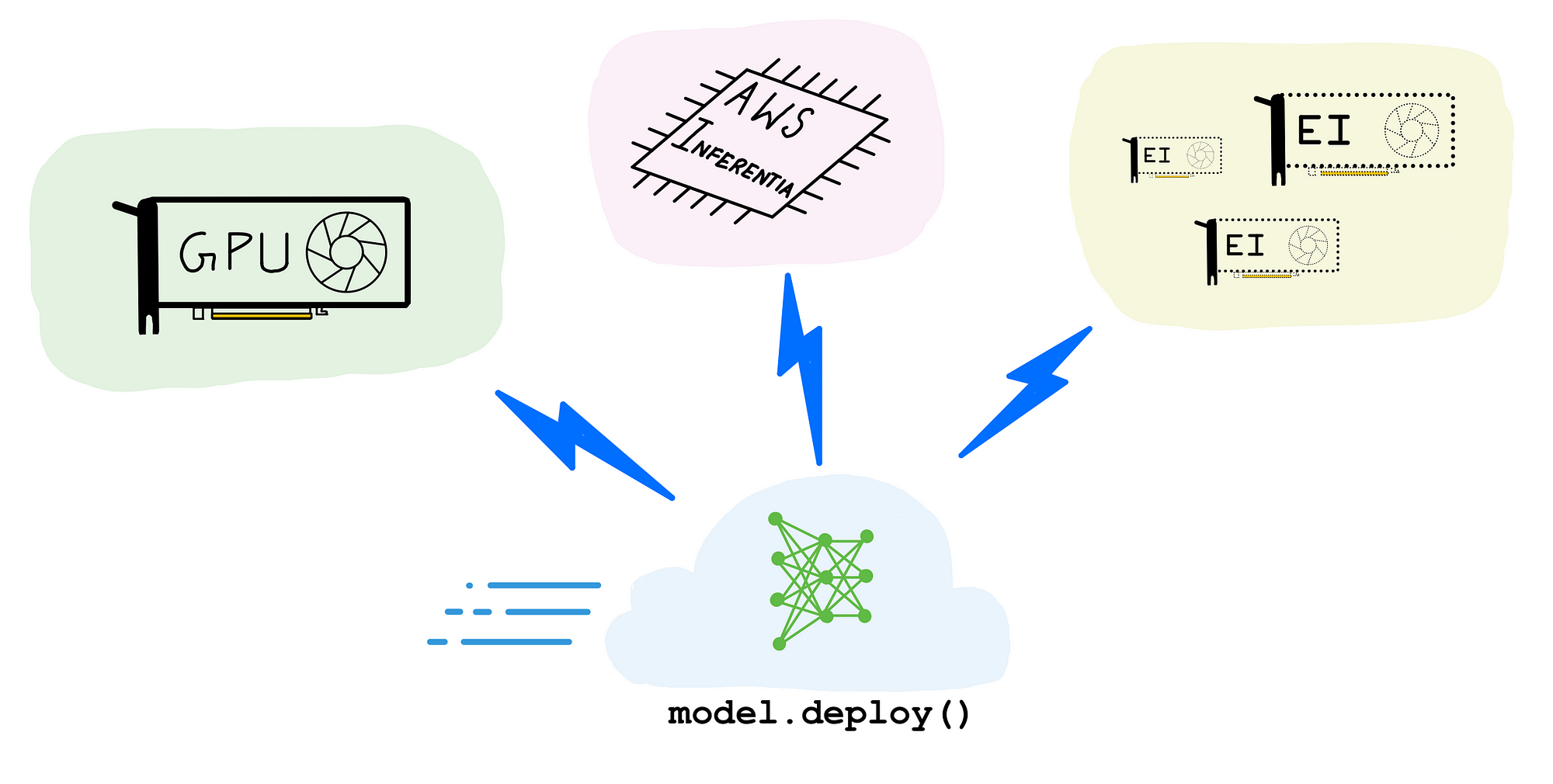

5.1 Distributed Training

Distributed training involves training deep learning models using multiple GPUs or even multiple machines. By spreading the workload across multiple devices, you can significantly reduce the training time and scale up the training process.

There are various ways to implement distributed training, including data parallelism and model parallelism. In data parallelism, each GPU receives a subset of the data, and the gradients are computed independently. The gradients are then synchronized across all GPUs to update the model parameters. On the other hand, model parallelism involves splitting the model across multiple GPUs, where each GPU is responsible for a specific portion of the model’s computations.
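The data-parallel update described above can be simulated without any GPUs. In this sketch each "worker" is just a loop iteration that computes the gradient on its shard of the data, and the averaging step stands in for the gradient synchronization. It assumes a one-parameter model `y = weight * x` with mean squared error and equal-sized shards. All names are illustrative.

```python
def mse_gradient(weight, xs, ys):
    """Gradient of mean squared error for the model y = weight * x."""
    n = len(xs)
    return sum(2 * (weight * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_step(weight, xs, ys, num_workers, lr):
    """One SGD step with per-shard gradients that are then averaged.

    Assumes the data splits evenly, so the plain average of the shard
    gradients equals the full-batch gradient.
    """
    if len(xs) % num_workers != 0:
        raise ValueError("data must split evenly across workers")
    shard = len(xs) // num_workers
    gradients = [
        mse_gradient(weight, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)  # each iteration stands in for one GPU
    ]
    averaged = sum(gradients) / num_workers  # the synchronization step
    return weight - lr * averaged, averaged


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
    print(data_parallel_step(0.0, xs, ys, num_workers=2, lr=0.01))
    print(mse_gradient(0.0, xs, ys))  # full-batch gradient for comparison
```

Because the averaged gradient matches the full-batch gradient, every worker applies the same update and the model copies stay identical.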

Using distributed training techniques, you can train larger models, process larger datasets, and achieve faster convergence. It is an effective way to overcome the limitations of individual GPUs and tackle complex deep learning tasks.

5.2 Mixed Precision Training

Mixed precision training is a technique that combines different numerical precisions (e.g., 16-bit and 32-bit) to accelerate training with little or no loss of model accuracy. By running most computations, such as the forward and backward passes, in 16-bit precision while keeping a 32-bit copy of the weights for updates, you can reduce memory requirements and improve computational efficiency.

Mixed precision training leverages the capabilities of newer GPU architectures, which support specialized hardware operations for mixed precision computations. It allows you to speed up training without compromising the quality of your models.
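One practical detail of 16-bit training is that very small gradients can underflow to zero. The sketch below demonstrates this with Python's `struct` module, which can round a value to IEEE 754 half precision. It also shows loss scaling, a companion technique not covered above, in which the gradient is multiplied by a constant before being stored in FP16 and divided back afterwards. The scale of 65536 and the function names are illustrative.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def scaled_fp16_gradient(gradient: float, loss_scale: float) -> float:
    """Store a scaled gradient in FP16, then unscale it in higher precision."""
    return to_fp16(gradient * loss_scale) / loss_scale


if __name__ == "__main__":
    tiny_gradient = 1e-8
    print(to_fp16(tiny_gradient))                        # underflows in FP16
    print(scaled_fp16_gradient(tiny_gradient, 65536.0))  # survives with scaling
```

Frameworks with automatic mixed precision typically manage the scale factor for you, so this is only meant to show why the scaling exists.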

5.3 Transfer Learning

Transfer learning is a technique where pre-trained deep learning models are used as a starting point for training a new model on a different task or dataset. This approach is particularly useful when you have limited labeled data or when training from scratch is time-consuming.

By leveraging the knowledge and representations learned from pre-trained models, you can build upon their features and fine-tune the model to the specifics of your target task. This not only saves time but can also improve the generalization and performance of your models.

5.4 GANs And Reinforcement Learning

GANs (Generative Adversarial Networks) and reinforcement learning are two advanced techniques that have gained significant attention in the field of deep learning. GANs involve training two neural networks, a generator and a discriminator, to learn to generate realistic and high-quality synthetic data.

Reinforcement learning focuses on training agents to take actions in an environment to maximize a reward signal. It involves an iterative process where the agent learns from trial and error, exploring different actions to find the optimal strategy.

Both GANs and reinforcement learning have shown promising results in various applications, including image generation, natural language processing, and game playing. They offer exciting possibilities for advancing deep learning techniques and pushing the boundaries of what is possible with GPUs.

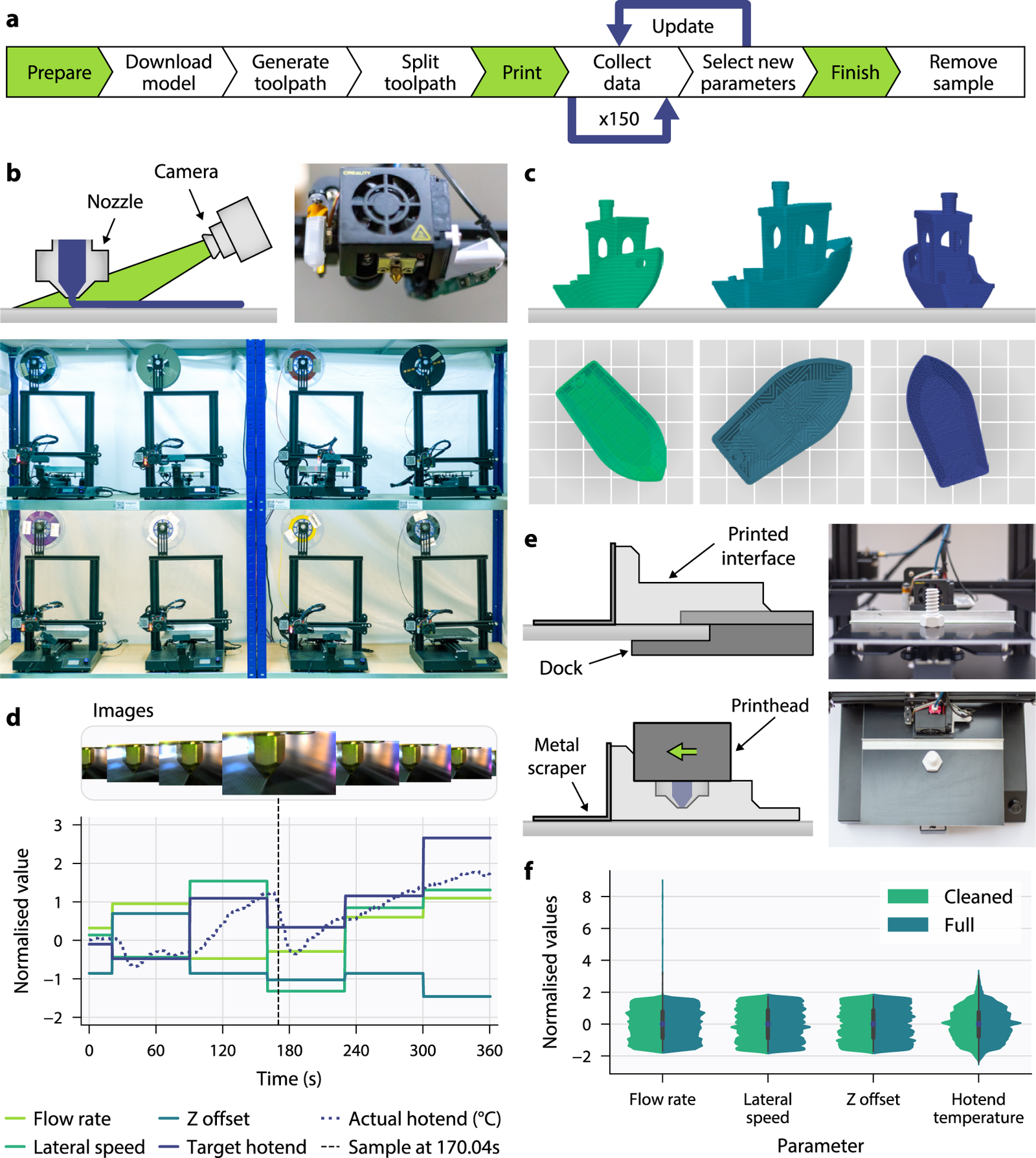

Credit: www.weka.io

Frequently Asked Questions On How To Set Up A GPU For Deep Learning

What Is A GPU And Why Is It Important For Deep Learning?

A GPU, or a Graphics Processing Unit, is a specialized electronic circuit that accelerates the creation and rendering of images, videos, and animations. In deep learning, GPUs play a crucial role by speeding up the training process of neural networks, allowing for faster and more efficient model development.

How Do I Choose The Right GPU For Deep Learning?

When choosing a GPU for deep learning, several factors are important to consider, including memory capacity, computational power, and compatibility with deep learning frameworks like TensorFlow or PyTorch. It’s recommended to choose a GPU with high memory capacity and a powerful GPU chip, such as NVIDIA’s GeForce or Tesla series, to ensure optimal performance.

What Are The Steps To Set Up A GPU For Deep Learning?

To set up a GPU for deep learning:

1. Install the latest GPU drivers from the manufacturer’s website.

2. Download and install GPU libraries such as the CUDA Toolkit and cuDNN.

3. Set up a deep learning framework such as TensorFlow or PyTorch.

4. Verify the GPU is recognized and accessible by running a sample deep learning script.

5. Install any additional software or libraries required for your specific deep learning project.

Can I Use An External GPU For Deep Learning On A Laptop?

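Yes, it’s possible to use an external GPU for deep learning on a laptop. External GPU enclosures, such as the Razer Core or ASUS ROG XG Station, allow you to connect a high-performance GPU to your laptop through a Thunderbolt port. Thunderbolt 3 and 4 use the USB-C connector, but a USB-C port without Thunderbolt support generally cannot drive an external GPU.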

This enables you to harness the power of a desktop-grade GPU for deep learning tasks, even on a portable laptop.

Conclusion

To effectively set up your GPU for deep learning, prioritize selecting the right hardware, ensuring compatibility with your system, and optimizing power settings. Stay updated with the latest GPU drivers and leverage frameworks like TensorFlow and PyTorch for efficient computing.

Balance memory and processing requirements to maximize performance. By following these tips, you can enhance your deep learning experiences and achieve better results. Experiment, learn, and adapt to the ever-evolving world of GPU-driven deep learning.